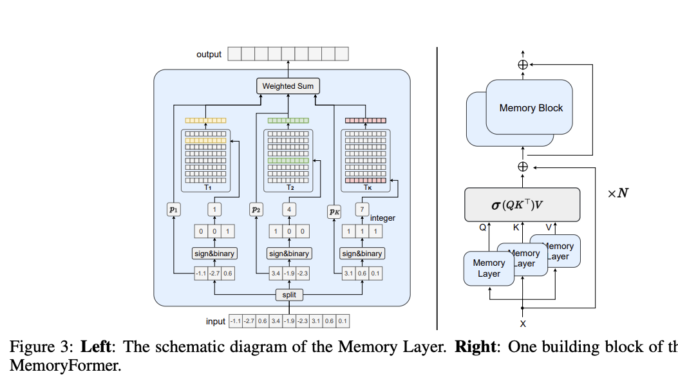

MemoryFormer: A Novel Transformer Architecture for Efficient and Scalable Large Language Models

Transformer models have driven major advances in artificial intelligence, powering applications in natural language processing, computer vision, and speech recognition. These models excel at understanding and generating sequential data by using mechanisms such as multi-head attention […]