Large Language Models (LLMs) have advanced natural language processing (NLP) considerably, enabling applications in text generation, summarization, and question answering. However, they process text at the token level, predicting one token at a time, and this presents challenges. The approach contrasts with human communication, which often operates at higher levels of abstraction, such as sentences or ideas.

Token-level modeling also struggles with tasks requiring long-context understanding and may produce outputs with inconsistencies. Moreover, extending these models to multilingual and multimodal applications is computationally expensive and data-intensive. To address these issues, researchers at Meta AI have proposed a new approach: Large Concept Models (LCMs).

Large Concept Models

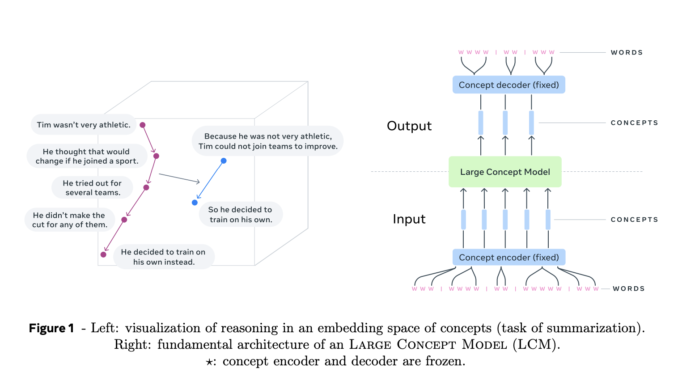

Meta AI’s Large Concept Models (LCMs) represent a shift from traditional LLM architectures. LCMs bring two significant innovations:

High-dimensional Embedding Space Modeling: Instead of operating on discrete tokens, LCMs perform computations in a high-dimensional embedding space. This space represents abstract units of meaning, referred to as concepts, which correspond to sentences or utterances. The embedding space, called SONAR, is designed to be language- and modality-agnostic, supporting up to 200 languages and multiple modalities, including text and speech.

Language- and Modality-agnostic Modeling: Unlike models tied to specific languages or modalities, LCMs process and generate content at a purely semantic level. This design allows seamless transitions across languages and modalities, enabling strong zero-shot generalization.

At the core of LCMs are concept encoders and decoders that map input sentences into SONAR’s embedding space and decode embeddings back into natural language or other modalities. These components are frozen, ensuring modularity and ease of extension to new languages or modalities without retraining the entire model.
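The data flow described above (split into sentences, encode each into a fixed-size vector, predict the next vector, decode back to text) can be sketched with toy stand-ins. Everything in this block is hypothetical: `toy_encode`, `toy_decode`, and `toy_concept_model` are illustrative placeholders, not the SONAR encoder or decoder and not Meta AI's model. The hash-based "encoder" carries no semantic meaning; it only shows where frozen components sit relative to the trainable part.

```python
import hashlib
import re

DIM = 8  # toy embedding size; an assumption for illustration only


def split_sentences(text):
    """Naive splitter: each sentence is treated as one 'concept'."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def toy_encode(sentence):
    """Stand-in for a frozen concept encoder: sentence -> fixed-size vector."""
    digest = hashlib.sha256(sentence.encode("utf-8")).digest()
    return [b / 255.0 for b in digest[:DIM]]


def toy_decode(embedding, candidates):
    """Stand-in for a frozen decoder: pick the candidate sentence whose
    embedding is nearest to the given vector (squared Euclidean distance)."""
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(candidates, key=lambda s: sq_dist(toy_encode(s), embedding))


def toy_concept_model(context):
    """Stand-in for the trainable part: predict the next embedding from the
    preceding ones. Here it is just their element-wise mean."""
    n = len(context)
    return [sum(vec[i] for vec in context) / n for i in range(DIM)]


text = ("LCMs work on sentences. Each sentence becomes one vector. "
        "The model predicts the next vector.")
sentences = split_sentences(text)
embeddings = [toy_encode(s) for s in sentences]   # frozen encoder
predicted = toy_concept_model(embeddings)          # concept-level prediction
decoded = toy_decode(predicted, sentences)         # frozen decoder
```

Because the encoder and decoder are only called, never updated, swapping in a different language or modality would, under this design, mean replacing those two functions while leaving the concept model untouched.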

Technical Details and Benefits of LCMs

LCMs introduce several innovations to advance language modeling:

Hierarchical Architecture: LCMs reason over a sequence of sentence-level concepts rather than individual tokens, a hierarchy intended to mirror how people work at the level of ideas before choosing words. This design improves coherence in long-form content and enables localized edits without disrupting broader context.

Diffusion-based Generation: Diffusion models were identified as the most effective design for LCMs. These models predict the next SONAR embedding based on preceding embeddings. Two architectures were explored:

One-Tower: A single Transformer decoder handles both context encoding and denoising.

Two-Tower: Separates context encoding and denoising, with dedicated components for each task.
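To make the Two-Tower split concrete, here is a toy sketch of the control flow only: one function summarises the preceding embeddings, and a separate function repeatedly refines a noisy sample conditioned on that summary. It is not a trained diffusion model. The names `contextualize`, `denoise_step`, and `generate_next_concept` are hypothetical, and the hand-written update rule stands in for what would be a learned denoising network in the real system.

```python
import random


def contextualize(context):
    """Tower 1 (hypothetical): summarise the preceding concept embeddings.
    Here the summary is simply their element-wise mean."""
    n = len(context)
    dim = len(context[0])
    return [sum(vec[i] for vec in context) / n for i in range(dim)]


def denoise_step(noisy, cond, step, total_steps):
    """Tower 2 (hypothetical): one denoising step conditioned on `cond`.
    A trained network would go here; this toy rule closes a growing
    fraction of the gap between the sample and the conditioning vector."""
    alpha = 1.0 / (total_steps - step)
    return [x + alpha * (c - x) for x, c in zip(noisy, cond)]


def generate_next_concept(context, total_steps=10, seed=0):
    """Start from Gaussian noise and refine it into the next embedding."""
    rng = random.Random(seed)
    cond = contextualize(context)              # context encoding, done once
    sample = [rng.gauss(0.0, 1.0) for _ in cond]
    for step in range(total_steps):            # iterative denoising
        sample = denoise_step(sample, cond, step, total_steps)
    return sample


context = [[0.0, 1.0], [2.0, 3.0]]
next_concept = generate_next_concept(context)
```

In a One-Tower variant, the two functions would be merged so that a single network sees both the context and the noisy sample at every step.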

Scalability and Efficiency: Concept-level modeling reduces sequence length compared to token-level processing, addressing the quadratic complexity of standard Transformers and enabling more efficient handling of long contexts.
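A rough back-of-the-envelope calculation shows why shorter sequences matter under quadratic attention. The figure of 20 tokens per sentence is an assumption chosen for illustration, not a number from the study, and the count covers only pairwise attention inside the sequence model; it ignores the cost of encoding and decoding each sentence.

```python
def attention_pairs(seq_len):
    """Number of query-key pairs in full self-attention over seq_len items."""
    return seq_len * seq_len


tokens = 2000                 # document length in tokens (illustrative)
tokens_per_sentence = 20      # assumed average; not taken from the paper
concepts = tokens // tokens_per_sentence

token_level_pairs = attention_pairs(tokens)
concept_level_pairs = attention_pairs(concepts)
ratio = token_level_pairs / concept_level_pairs

print(f"{tokens} tokens -> {token_level_pairs} attention pairs")
print(f"{concepts} concepts -> {concept_level_pairs} attention pairs")
print(f"reduction factor: {ratio:.0f}x")
```

Under these assumptions the sequence shrinks by a factor of 20, so the pairwise attention count shrinks by 20 squared, or 400.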

Zero-shot Generalization: LCMs exhibit strong zero-shot generalization, performing well on unseen languages and modalities by leveraging SONAR’s extensive multilingual and multimodal support.

Search and Stopping Criteria: A search algorithm with a stopping criterion based on distance to an “end of document” concept ensures coherent and complete generation without requiring fine-tuning.
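The stopping idea can be sketched as a generation loop that halts once a predicted embedding lands close enough to a fixed "end of document" vector. This is a minimal illustration under stated assumptions: Euclidean distance, the `threshold` value, the `max_concepts` cap, and the toy predictor are all hypothetical choices, and the study's actual search procedure may differ.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def generate_until_end(predict_next, context, end_embedding,
                       threshold=0.1, max_concepts=50):
    """Generate embeddings until one falls within `threshold` of the
    end-of-document embedding, or until `max_concepts` is reached."""
    history = list(context)
    generated = []
    for _ in range(max_concepts):
        nxt = predict_next(history)
        if euclidean(nxt, end_embedding) <= threshold:
            break                      # close enough to "end of document"
        generated.append(nxt)
        history.append(nxt)
    return generated


END = [1.0, 1.0]  # hypothetical end-of-document embedding


def toy_predictor(history):
    """Toy stand-in for the model: move halfway from the last embedding
    toward END, so generation drifts to the stopping point."""
    last = history[-1]
    return [x + 0.5 * (e - x) for x, e in zip(last, END)]


output = generate_until_end(toy_predictor, [[0.0, 0.0]], END)
```

The `max_concepts` cap is a safety net for the case where the model never approaches the end embedding.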

Insights from Experimental Results

Meta AI’s experiments highlight the potential of LCMs. A diffusion-based Two-Tower LCM scaled to 7 billion parameters demonstrated competitive performance in tasks like summarization. Key results include:

Multilingual Summarization: LCMs outperformed baseline models in zero-shot summarization across multiple languages, showcasing their adaptability.

Summary Expansion Task: This novel evaluation task demonstrated the capability of LCMs to generate expanded summaries with coherence and consistency.

Efficiency and Accuracy: LCMs processed shorter sequences more efficiently than token-based models while maintaining accuracy. Metrics such as mutual information and contrastive accuracy showed significant improvement, as detailed in the study’s results.

Conclusion

Meta AI’s Large Concept Models present a promising alternative to traditional token-based language models. By leveraging high-dimensional concept embeddings and modality-agnostic processing, LCMs address key limitations of existing approaches. Their hierarchical architecture enhances coherence and efficiency, while their strong zero-shot generalization expands their applicability to diverse languages and modalities. As research into this architecture continues, LCMs have the potential to redefine the capabilities of language models, offering a more scalable and adaptable approach to AI-driven communication.

Check out the Paper and GitHub Page. All credit for this research goes to the researchers of this project.


Asif Razzaq is the CEO of Marktechpost Media Inc.
