RAG-Check: A Novel AI Framework for Hallucination Detection in Multi-Modal Retrieval-Augmented Generation Systems

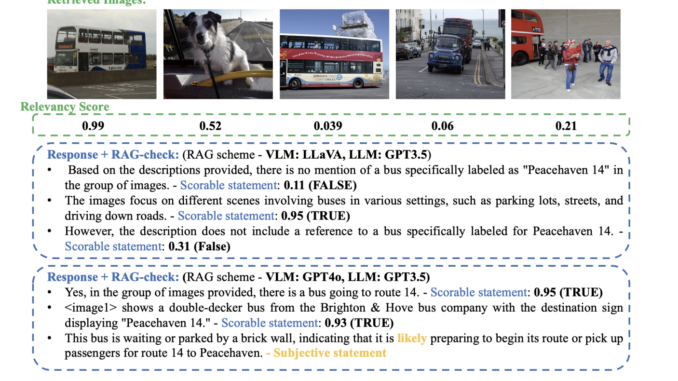

Large Language Models (LLMs) have transformed generative AI with their ability to produce human-like responses. However, these models face a critical challenge known as hallucination: the tendency to generate incorrect or irrelevant information. This issue […]